Overview

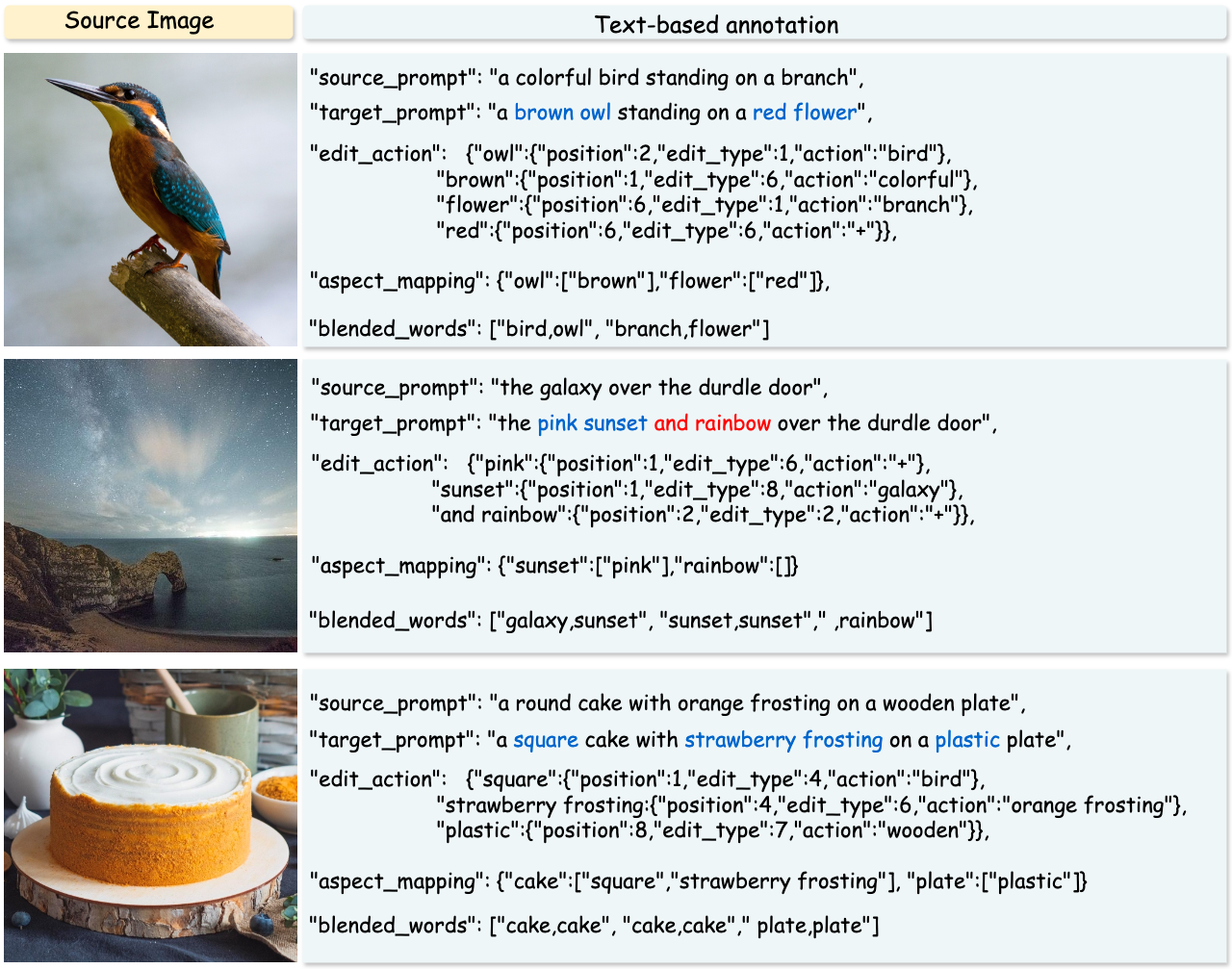

Our dataset annotations are structured to provide comprehensive information for each image, facilitating a deeper understanding of the editing process. Each annotation consists of the following key elements:

- Source Prompt: The original description or caption of the image before any edits are made.

- Target Prompt: The description or caption of the image after the edits are applied.

- Edit Action: A detailed specification of the changes made to the image, including:

- The position index in the source prompt where changes occur.

- The type of edit applied (e.g., 1:change object, 2:add object, 3:remove object, 4:change attribute content, 5:change attribute pose, 6:change attribute color, 7:change attribute material, 8:change background, 9:change style).

- The operation required to achieve the desired outcome (e.g., '+' / '-' means adding/removing words at the specified position, and 'xxx' means replacing the existing words.

- Aspect Mapping: A mapping that connects objects undergoing editing to their respective modified attributes. This helps identify which objects are subject to editing and the specific attributes that are altered.

Example Annotation

Here is an example annotation for an image in our dataset:

{

"000000000002": {

"image_path": "0_random_140/000000000002.jpg",

"source_prompt": "a cat sitting on a wooden chair",

"target_prompt": "a [red] [dog] [with flowers in mouth] [standing] on a [metal] chair",

"edit_action":

{"red":{"position":1,"edit_type":6,"action":"+"}},

{"dog":{"position":1,"edit_type":1,"action":"cat"}},

{"with flowers in mouth":{"position":2,"edit_type":2,"action":"+"}},

{"standing":{"position":2,"edit_type":5,"action":"sitting"}},

{"metal":{"position":5,"edit_type":7,"action":"wooden"}},

"aspect_mapping": {

"dog":["red","standing"],

"chair":["metal"],

"flowers":[]},

"blended_words": [

"cat,dog",

"chair,chair"

],

"mask": "0 262144"

}

}